# check if you have a notebook with a GPU

import torch

torch.cuda.is_available()

# If False, Go to Menu > Runtime > Change runtime. Hardware accelerator -> GPU14 Week 7: reaction prediction with Molecular Transformer

We have talked about different reaction prediction methods. Template-based approaches use reaction templates to select the most likely reaction outcome. However, these models are limited by the dataset (the model can just learn reaction classes, and is not able to get deeper features), quality and quantity of the templates (you can only predict reactions that are included in your templates, and the model will be heavily dependent on the quality of the atom mapping), and they cannot predict selectivity.

On the other hand, template-free models can overcome many of these limitations. Today, we will use a language model, the Molecular Transformer, to do chemical reaction prediction. You can check the original paper here.

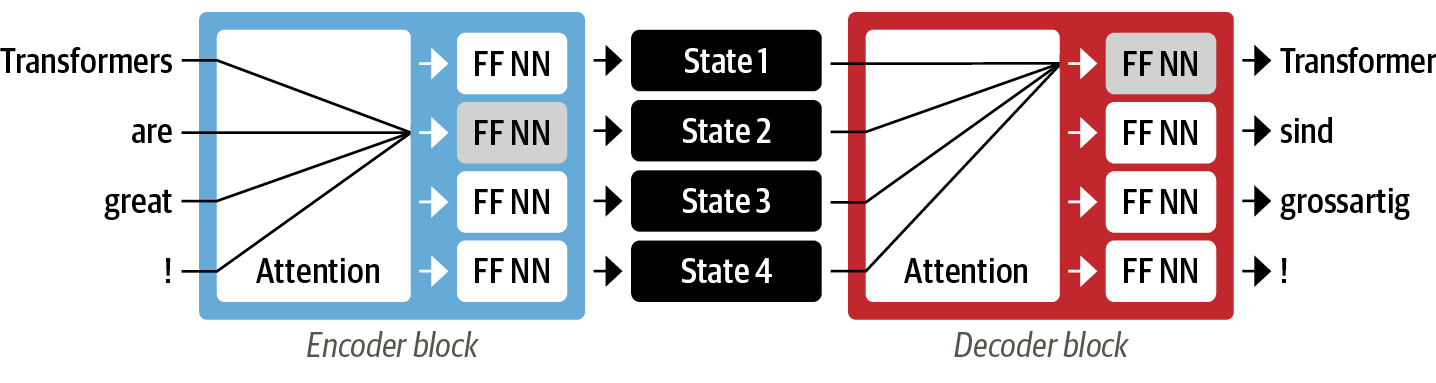

This model is based on the Transformer architecture, which is behind some of the most remarkable AI models of the last years (eg. AlphaFold2 or ChatGPT). Basically, we will treat reaction prediction as a machine translation model, where the model will learn the “grammar of the reactions”. Some of the main advantages of the model are

0. Relevant packages

OpenNMT

OpenNMT is an open-source library for translation tasks. We will use it to create and train our model as it is a flexible and easy to use framework. You can check the documentation here.

We will also use rdkit and other common auxiliary libraries.

!pip install rdkit-pypi==2022.3.1

!pip install pip install OpenNMT-py==2.2.0

!wget https://raw.githubusercontent.com/schwallergroup/ai4chem_course/main/notebooks/07%20-%20Reaction%20Prediction/utils.pyimport re

import os

import gdown

import random

import pandas as pd

from tqdm.auto import tqdm

tqdm.pandas()

from rdkit import Chem

# to display molecules

from rdkit.Chem.Draw import IPythonConsole

from rdkit.Chem import Draw

IPythonConsole.ipython_useSVG=True

# disable RDKit warnings

from rdkit import RDLogger

RDLogger.DisableLog('rdApp.*')

from utils import download_data, canonicalize_smiles, evaluateDownload data

Firstly, we will download the data for training. We will use USPTO, a widely used dataset containing reaction SMILES extracted from the US list of patents from 1976 to 2016.

train_df, val_df, test_df = download_data()After running this cell you should get these sets (check the USPTO_480 folder in your Colab directory):

- Train: we will use these reaction SMILES to train the model

- Validation: used for checking the learning progress during training and hyperparameter tuning

- Test: we will use these data only once we get the final model to test its performanceEach set contains two .txt files, one containing the precursors (reactants, src) and another containing the targets (products, tgt). Remember that our task can be seen as a translation between two sequences, reactants and products SMILES in this case.

Tokenization

We already mentioned tokenization in the previous tutorial when training the LSTM. To be able to train a language model, we need to split the strings into tokens.

We take the regex pattern introduced in the Molecular Transformer paper.

SMI_REGEX_PATTERN = r"(\%\([0-9]{3}\)|\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\||\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>>?|\*|\$|\%[0-9]{2}|[0-9])"

def smiles_tokenizer(smiles):

smiles_regex = re.compile(SMI_REGEX_PATTERN)

tokens = [token for token in smiles_regex.findall(smiles)]

return ' '.join(tokens)

print('Tokenizing training set')

train_df['tokenized_precursors'] = train_df.precursors.progress_apply(lambda smi: smiles_tokenizer(smi))

train_df['tokenized_products'] = train_df.products.progress_apply(lambda smi: smiles_tokenizer(smi))

print('Tokenizing validation set')

val_df['tokenized_precursors'] = val_df.precursors.progress_apply(lambda smi: smiles_tokenizer(smi))

val_df['tokenized_products'] = val_df.products.progress_apply(lambda smi: smiles_tokenizer(smi))

print('Tokenizing test set')

test_df['tokenized_precursors'] = test_df.precursors.progress_apply(lambda smi: smiles_tokenizer(smi))

test_df['tokenized_products'] = test_df.products.progress_apply(lambda smi: smiles_tokenizer(smi))Save the preprocessed data set

Don’t forget to shuffle the training set before saving it. At least earlier versions of OpenNMT-py would not shuffle it during preprocessing. After that, we save the tokenized files, which are now ready to use for training.

shuffled_train_df = train_df.sample(frac=1., random_state=42)

data_path = 'USPTO_480k_preprocessed'

os.makedirs(data_path, exist_ok=True)

with open(os.path.join(data_path, 'precursors-train.txt'), 'w') as f:

f.write('\n'.join(shuffled_train_df.tokenized_precursors.values))

with open(os.path.join(data_path, 'products-train.txt'), 'w') as f:

f.write('\n'.join(shuffled_train_df.tokenized_products.values))

with open(os.path.join(data_path, 'precursors-val.txt'), 'w') as f:

f.write('\n'.join(val_df.tokenized_precursors.values))

with open(os.path.join(data_path, 'products-val.txt'), 'w') as f:

f.write('\n'.join(val_df.tokenized_products.values))

with open(os.path.join(data_path, 'precursors-test.txt'), 'w') as f:

f.write('\n'.join(test_df.tokenized_precursors.values))

with open(os.path.join(data_path, 'products-test.txt'), 'w') as f:

f.write('\n'.join(test_df.tokenized_products.values))Building the vocab

The first step for the OpenNMT-py pipeline is to build the vocabulary.

Please note: - Typical sequence pairs in machine translation are much shorter than the ones you encounter in chemical reaction prediction. Hence, set a src_seq_length and tgt_seq_length that is much higher than the maximum you would expect to include all reactions (in this case we set a value of 1000). - With n_sample set to -1 we include the whole dataset.

The paths to the training and validation datasets are defined in the run_config.yaml:

# Here you can check the documentation to know better how this file works.

# https://opennmt.net/OpenNMT-py/quickstart.html

# Examples in https://github.com/OpenNMT/OpenNMT-py/tree/master/config

## Where the samples will be written

save_data: example_run

## Where the vocab(s) will be written

src_vocab: example_run/uspto.vocab.src

tgt_vocab: example_run/uspto.vocab.src

# Prevent overwriting existing files in the folder

overwrite: true

share_vocab: true

# Corpus opts:

data:

corpus-1:

path_src: USPTO_480k_preprocessed/precursors-train.txt

path_tgt: USPTO_480k_preprocessed/products-train.txt

valid:

path_src: USPTO_480k_preprocessed/precursors-val.txt

path_tgt: USPTO_480k_preprocessed/products-val.txtAs the source (precusors) and the target (products) are represented as SMILES and consist of the same tokens, we share the vocabulary between source and target (share_vocab: true).

config_url = 'https://raw.githubusercontent.com/schwallergroup/dmds_language_models_for_reactions/main/example_run/run_config.yaml'

config_folder = 'example_run'

config_name = 'run_config.yaml'

os.makedirs(config_folder, exist_ok=True)

target_path = os.path.join(config_folder, config_name)

if not os.path.exists(target_path):

gdown.download(config_url, target_path, quiet=False)

else:

print(f"{target_path} already exists")Now we can run this command to build our vocabulary file.

! onmt_build_vocab -config example_run/run_config.yaml \

-src_seq_length 1000 -tgt_seq_length 1000 \

-src_vocab_size 1000 -tgt_vocab_size 1000 \

-n_sample -1You can check how the uspto.vocab.src in the example_run folder to see how the vocabulary looks like.

Training the Molecular Transformer

If you look at the run_config.yaml, you will see that we have defined some of the training parameters (but not yet the hyperparameters of the model).

# Train on a single GPU

world_size: 1

gpu_ranks: [0]

# Where to save the checkpoints

save_model: example_run/model

save_checkpoint_steps: 5000

keep_checkpoint: 3

train_steps: 400000

valid_steps: 10000

report_every: 100

tensorboard: true

tensorboard_log_dir: log_dirThe Transformer architecture was published in the Attention is all you need paper by Vaswani et al. (NeurIPS, 2017). The model sizes (65 to 212M parameters) in that paper were larger than what we use for reaction prediction (20M parameters).

Illustrated transformer blogposts: - https://nlp.seas.harvard.edu/2018/04/03/attention.html - https://jalammar.github.io/illustrated-transformer/

The cell below contains the command used to train the model. Here you can set all your hyperparameters. In this case, we will use the values that were published in this paper, where the Molecular Transformer was trained to predict carbohydrate reactions.

# hyperparameters from https://github.com/rxn4chemistry/OpenNMT-py/tree/carbohydrate_transformer

!onmt_train -config example_run/run_config.yaml \

-seed 42 -gpu_ranks 0 \

-param_init 0 \

-param_init_glorot -max_generator_batches 32 \

-batch_type tokens -batch_size 6144\

-normalization tokens -max_grad_norm 0 -accum_count 4 \

-optim adam -adam_beta1 0.9 -adam_beta2 0.998 -decay_method noam \

-warmup_steps 8000 -learning_rate 2 -label_smoothing 0.0 \

-layers 4 -rnn_size 384 -word_vec_size 384 \

-encoder_type transformer -decoder_type transformer \

-dropout 0.1 -position_encoding -share_embeddings \

-global_attention general -global_attention_function softmax \

-self_attn_type scaled-dot -heads 8 -transformer_ff 2048 \

-tensorboard True -tensorboard_log_dir log_dirThe training can take more than 24 hours on a single GPU! Hence, stop the previous cell, we will directly download the trained model :)

trained_model_url = 'https://drive.google.com/uc?id=1ywJCJHunoPTB5wr6KdZ8aLv7tMFMBHNy'

model_folder = 'models'

model_name = 'USPTO480k_model_step_400000.pt'

os.makedirs(model_folder, exist_ok=True)

target_path = os.path.join(model_folder, model_name)

if not os.path.exists(target_path):

gdown.download(trained_model_url, target_path, quiet=False)

else:

print(f"{target_path} already exists")Evaluating the model

We’ll use a pre-made script for this, don’t worry about it now. Basically we want to check what percentage of the predictions are correct (we compute the accuracy of the model). This cell takes around 20 minutes to execute, as we are using the model to predict nearly 40k reactions

!onmt_translate -model models/USPTO480k_model_step_400000.pt -gpu 0 \

--src USPTO_480k_preprocessed/precursors-val.txt \

--tgt USPTO_480k_preprocessed/products-val.txt \

--output models/USPTO480k_model_step_400000_val_predictions.txt \

--n_best 5 --beam_size 10 --max_length 300 --batch_size 64For each reaction, we obtain 5 predictions that can be used to compute the accuracy. The top-n accuracy is the accuracy that includes the best n predictions (for example, top-3 accuracy will consider one prediction as true if the true product matches any of the best 3 predictions). Now run this to compute the accuracy of the model. What do these numbers tell you?

evaluate(n_best=5)Exercise: Test the limits of the Molecular Transformer!

Now it’s your turn! Try to make some predictions using the Molecular Transformer and check its limitations. You can try to explore reactions with challenging stereochemistry or regioselectivity, and see if the model is able to correctly get them.

# It's an example

from rdkit import Chem

from rdkit.Chem import Draw

from rdkit.Chem.Draw import IPythonConsole

from IPython.display import display

def predict_reaction(precursors):

""" predict product of one reaction from its precursors SMILES list

"""

smi_pres = '.'.join(precursors)

smi_pres = smiles_tokenizer(smi_pres)

with open('precursors-try.txt', 'w') as f:

f.write(f'{smi_pres}\n')

os.system("rm preds.txt")

os.system("onmt_translate -model models/USPTO480k_model_step_400000.pt -gpu 0 \

--src precursors-try.txt --output preds.txt \

--n_best 1 --beam_size 5 --max_length 300 --batch_size 64")

with open('preds.txt', 'r') as f:

pred_smi = f.readline().strip()

pred_smi = pred_smi.replace(" ", "")

# print result

print(f"The SMILES of the predicted product is: {pred_smi}")

# Use RDKit to visualize the reactants and product

# precursors

print("\n\nVisualization of the reaction:\n")

print("Precursors:")

precursors_mols = [Chem.MolFromSmiles(smi) for smi in precursors]

[display(mol) for mol in precursors_mols]

print("Product:")

product_mol = Chem.MolFromSmiles(pred_smi)

display(product_mol)

return pred_smi

# Write the SMILES of all precursors for a reaction you want to predict into a list

precursors_smis = [

"COC(=O)c1cc2c3cccc(Cl)c3n(C)c2s1",

"[K+].[OH-]",

]

pred_smi = predict_reaction(precursors_smis)